Apache Kafka and Ably: Building scalable, dependable chat applications

In this blog post, we will look at Apache Kafka’s characteristics and explore why it’s such a reliable choice as the backend, event-driven pipeline of your chat system. We will then see how Ably complements and extends Kafka beyond your private network, to end-users at the edge, enabling you to create chat apps that are dependable at scale.

From the early days of ICQ and other similar tools, chat has always been a part of the appeal of the internet. Nowadays, we all use platforms like WhatsApp, Facebook Messenger, Snapchat, or Telegram daily to keep in touch with friends and family. In addition, solutions such as Slack, Intercom, Microsoft Teams, and HubSpot have demonstrated the value of chat in a business environment, where it empowers remote teams to communicate and collaborate, and is often used as a support channel for customers.

If you’re planning to build chat features, you probably already know that engineering a system you can trust to deliver at scale is by no means an easy feat. Many chat products have to serve a very high and quickly changing number of people: anywhere between thousands and millions of concurrent users, some “chattier” than others, and some with fluctuating connectivity. This makes providing dependable chat functionality that is reliably available and delights end-users a complex challenge. Failing to do it right leads to user dissatisfaction, as publicly available reviews of various chat apps demonstrate.

Going beyond user dissatisfaction, a poorly engineered chat solution can prevent teams from communicating and collaborating efficiently: just imagine what can happen when the chat platform you are using company-wide (both for internal comms and for customer support) crashes and is unavailable for hours. This can lead to financial losses and missed opportunities. Or think how confusing it can be if chat messages are delivered to recipients out of order, or with at-most-once semantics (so some messages may never arrive), rather than exactly once.

There are many aspects to consider when building dependable chat systems. One of the most important decisions you’ll have to make is what technologies to use. When it comes to building scalable, dependable systems (chat or otherwise), Apache Kafka is one of the most popular and reliable solutions worth including in your tech stack.

Why Kafka is an excellent choice for chat apps

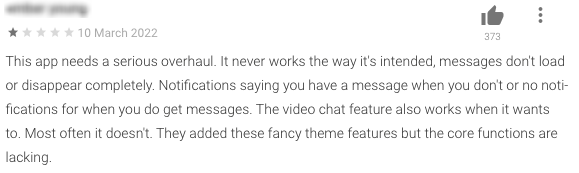

Created about a decade ago, Kafka is a widely adopted event streaming solution used for building high-performance, event-driven pipelines. Kafka uses the pub/sub pattern and acts as a broker to enable asynchronous event-driven communication between various backend components of a system.

Let's now briefly summarize Kafka's key concepts.

Events

Events (also known as records or messages) are Kafka's smallest building blocks. To describe it in the simplest way possible, an event records that something relevant has happened. For example, a user has sent a message, or someone has joined a chat room. At the very minimum, a Kafka event consists of a key, a value, and a timestamp. Optionally, it may also contain metadata headers. Here's a basic example of an event:

{
  "key": "John Doe",
  "value": "Has joined the ‘Holiday plans’ chat room",
  "timestamp": "Mar 20, 2022, at 15:45"
}

Topics

A topic is a durable, append-only log of events, stored for as long as needed. The various components of your backend ecosystem can write events to topics and read events from them. It's worth mentioning that each topic consists of one or more partitions, and that Kafka preserves the order of events within each partition. The benefit is that partitioning allows you to parallelize a topic by splitting its data across multiple Kafka brokers. This means that each partition can be placed on a separate machine, which is great from a scalability point of view, since various services within your system can read and write data from and to multiple brokers at the same time.
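To make the idea of partitioning more concrete, here's a minimal sketch of key-based partition assignment. It's an illustration only: the `hashKey`, `partitionFor`, and `append` helpers are hypothetical, and the hash used here (FNV-1a) is a stand-in, not the function Kafka's own partitioner uses. The point is that events sharing a key land in the same partition, so their relative order is kept.

```javascript
// Stand-in hash (32-bit FNV-1a). Kafka's default partitioner uses a
// different hash; only the "hash the key, then take it modulo the
// partition count" idea is illustrated here.
function hashKey(key) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < key.length; i++) {
    hash ^= key.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0; // keep it an unsigned 32-bit value
  }
  return hash;
}

// Map a key to one of `partitionCount` partitions.
function partitionFor(key, partitionCount) {
  return hashKey(key) % partitionCount;
}

// Hypothetical topic with four partitions, each an append-only array.
const partitions = Array.from({ length: 4 }, () => []);

function append(key, value) {
  const index = partitionFor(key, partitions.length);
  partitions[index].push({ key, value });
  return index;
}

const first = append("room:holiday-plans", "Hi all");
const second = append("room:holiday-plans", "Where shall we go?");
console.log(first === second); // true: same key, same partition
```

Keying chat events by room (an assumption made for this sketch) would keep each room's messages in order, while different rooms can be spread across brokers.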

Producers and consumers

Producers are services that publish (write) to Kafka topics, while consumers subscribe to Kafka topics to consume (read) events. Since Kafka is a pub/sub solution, producers and consumers are entirely decoupled.

Producers and consumers writing and reading events from Kafka topics
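The decoupling between producers and consumers can be sketched with a small in-memory model. This is not the Kafka client API: `TopicLog` and `Consumer` are hypothetical names, and the log stands in for a single partition. What it shows is that each consumer tracks its own offset, so consumers read at their own pace without affecting the producer or each other.

```javascript
// In-memory stand-in for a single topic partition: an append-only log.
class TopicLog {
  constructor() {
    this.events = [];
  }

  // Producer side: append an event and return its offset.
  publish(event) {
    this.events.push(event);
    return this.events.length - 1;
  }

  // Consumer side: read every event from the given offset onwards.
  readFrom(offset) {
    return this.events.slice(offset);
  }
}

// Each consumer keeps its own position in the log.
class Consumer {
  constructor(log) {
    this.log = log;
    this.offset = 0;
  }

  // Fetch the events this consumer has not seen yet and advance its offset.
  poll() {
    const batch = this.log.readFrom(this.offset);
    this.offset += batch.length;
    return batch;
  }
}

const chatLog = new TopicLog();
const historyWriter = new Consumer(chatLog);
const analytics = new Consumer(chatLog);

chatLog.publish({ key: "John Doe", value: "Hello" });
const seenByHistory = historyWriter.poll(); // one event so far

chatLog.publish({ key: "Jane Doe", value: "Hi John" });
const seenByAnalytics = analytics.poll(); // both events, read in one go
const nextForHistory = historyWriter.poll(); // only the event it had not seen
```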

Kafka ecosystem

To enhance and complement its core event streaming capabilities, Kafka leverages a rich ecosystem, with additional components and APIs, like Kafka Streams, ksqlDB, and Kafka Connect.

Kafka Streams enables you to build realtime backend apps and microservices, where the input and output data are stored in Kafka clusters. Kafka Streams is used to process (group, aggregate, filter, and enrich) streams of data in realtime.
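As a rough illustration of what "filter, group, and aggregate" means for a stream of chat events, here's a plain JavaScript sketch. Kafka Streams itself is a Java library, and nothing below uses its API; the event shape and the `countMessagesPerRoom` function are assumptions made for this example, and a real stream processor would work on an unbounded stream rather than an array.

```javascript
// Hypothetical chat events, keyed by room.
const inboundEvents = [
  { key: "room-1", value: { type: "message", text: "Hello" } },
  { key: "room-2", value: { type: "message", text: "Anyone here?" } },
  { key: "room-1", value: { type: "join", user: "Jane" } },
  { key: "room-1", value: { type: "message", text: "Hi Jane" } },
];

function countMessagesPerRoom(events) {
  const counts = new Map();
  for (const event of events) {
    // Filter: ignore anything that is not a chat message.
    if (event.value.type !== "message") continue;
    // Group by key and aggregate: keep a running count per room.
    counts.set(event.key, (counts.get(event.key) || 0) + 1);
  }
  return counts;
}

const messageCounts = countMessagesPerRoom(inboundEvents);
console.log(messageCounts.get("room-1")); // 2
console.log(messageCounts.get("room-2")); // 1
```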

ksqlDB is a database designed specifically for stream processing apps. You can use ksqlDB to build applications from Kafka topics by using only SQL statements and queries. As ksqlDB is built on Kafka Streams, any ksqlDB application communicates with a Kafka cluster like any other Kafka Streams application.

Kafka Connect is a tool designed for reliably moving large volumes of data between Kafka and other systems, such as Elasticsearch, Hadoop, or MongoDB. So-called “connectors” are used to transfer data in and out of Kafka. There are two types of connectors:

Sink connector. Used for streaming data from Kafka topics into another system.

Source connector. Used for ingesting data from another system into Kafka.

Kafka’s characteristics

Kafka displays robust characteristics, suitable for developing dependable chat functionality:

Low latency. Kafka provides very low end-to-end latency, even when high throughputs and large volumes of data are involved.

Scalability & high throughput. Large Kafka clusters can scale to hundreds of brokers and thousands of partitions, successfully handling trillions of messages and petabytes of data.

Data integrity. Kafka guarantees message delivery & ordering and provides exactly-once processing capabilities (note that Kafka provides at-least-once guarantees by default, so you will have to configure it to display an exactly-once behavior).

Durability. Persisting data for longer periods of time is crucial for certain use cases, such as chat history. Fortunately, Kafka is highly durable and can persist all messages to disk for as long as needed.

High availability. Kafka is designed with failure in mind and failover capabilities. Kafka achieves high availability by replicating the log for each topic's partitions across a configurable number of brokers. Note that replicas can live in different data centers, across different regions.
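To illustrate the difference between at-least-once delivery and exactly-once processing, here's a minimal sketch of an idempotent consumer. Note that this is not how Kafka implements its exactly-once capabilities (those rely on idempotent producers and transactions); it's a common application-level technique, shown here under the assumption that every event carries a unique `id`. The `createIdempotentProcessor` helper is hypothetical.

```javascript
// Wraps a handler so that an event redelivered with the same id
// has no additional effect.
function createIdempotentProcessor(handler) {
  const processedIds = new Set();
  return function handleOnce(event) {
    if (processedIds.has(event.id)) {
      return false; // duplicate delivery, skip it
    }
    handler(event);
    processedIds.add(event.id);
    return true;
  };
}

const delivered = [];
const handleOnce = createIdempotentProcessor((event) => delivered.push(event.text));

// At-least-once delivery: the second event arrives twice after a retry.
const incoming = [
  { id: "m1", text: "Hello" },
  { id: "m2", text: "Are you there?" },
  { id: "m2", text: "Are you there?" },
];

const results = incoming.map((event) => handleOnce(event));
console.log(results);   // [ true, true, false ]
console.log(delivered); // [ 'Hello', 'Are you there?' ]
```

In a real system the set of processed IDs would need to be stored durably and bounded in size; the in-memory `Set` is only there to keep the sketch short.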

Connecting your Kafka pipeline to end-user devices

Kafka is a crucial component for building an event-driven, time-ordered, highly available, and fault-tolerant data pipeline. A key thing to bear in mind is that Kafka is designed to operate in private networks (intranets), enabling streams of data to flow between microservices, databases, and other types of components within your backend ecosystem.

Kafka is not designed or optimized to distribute and ingest events over the public internet. So, the question is, how do you connect your Kafka pipeline to end-users at the network edge?

The solution is to use Kafka in combination with an intelligent internet-facing messaging layer built specifically for communication over the public internet. Ideally, this messaging layer should provide the same level of guarantees and display similar characteristics to Kafka (low-latency, data integrity, durability, scalability and high availability, etc.). You don’t want to degrade the overall dependability of your system by pairing Kafka with a less reliable internet-facing messaging layer.

You could arguably build your very own internet-facing messaging layer to bridge the gap between Kafka and chat users. However, developing your proprietary solution is not always a viable option — architecting and maintaining a dependable messaging layer is a massive, complex, and costly undertaking. It’s often more convenient and less risky to use an established existing solution.

Use case: chat apps with Kafka and Ably

Ably is a far-edge pub/sub messaging platform. In a way, Ably is the public internet-facing equivalent of your backend Kafka pipeline. Our platform offers a scalable, dependable, and secure way to distribute and collect low-latency messages (events) to and from client devices via a fault-tolerant, autoscaling global edge network. We deliver 750+ billion messages (from Kafka and many other systems) to more than 300 million end-users each month.

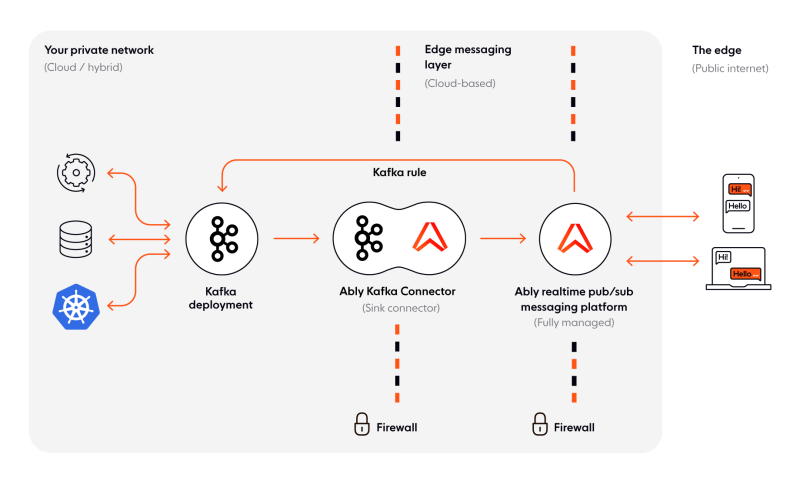

How Ably and Kafka work together to deliver scalable, dependable chat features

Connecting your Kafka deployment to Ably is a straightforward affair. This is made possible with the help of the Ably Kafka Connector, a sink connector built on top of Kafka Connect. The Ably Kafka Connector provides a ready-made integration that enables you to publish data from Kafka topics into Ably channels with ease and speed. You can install the Ably Kafka Connector from GitHub, or the Confluent Hub, where it’s available as a Verified Gold Connector.

To demonstrate how straightforward it is to use the Ably Kafka Connector, here’s an example of how data is consumed from Kafka and published to Ably:

{
  "name": "ChannelSinkConnectorConnector_0",
  "config": {
    "connector.class": "com.ably.kafka.connect.ChannelSinkConnector",
    "key.converter": "org.apache.kafka.connect.converters.ByteArrayConverter",
    "value.converter": "org.apache.kafka.connect.converters.ByteArrayConverter",
    "topics": "outbound:chat",
    "channel": "inbound:chat",
    "client.key": "My_API_Key",
    "client.id": "kafkaconnector1"
  }
}

This is how client devices (chat users in our case) send messages via Ably:

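const sendChatMessage = (messageText) => {
  // Publish the chat message to the Ably channel.
  channel.publish({ name: "chat-message", data: messageText });
  // Clear the input state and return focus to the input box.
  setMessageText("");
  inputBox.focus();
};

And here’s what you do to display received messages in the UI: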

const messages = receivedMessages.map((message, index) => {
  const author = message.connectionId === ably.connection.id ? "me" : "other";
  return <span key={index} className={styles.message} data-author={author}>{message.data}</span>;
});

Note that the two code snippets above are just a Next.js (React) example. See our complete guide about building a chat app with Next.js, Vercel, and Ably for more details.

Ably works with many other technologies, not just React. We provide 25+ client library SDKs, targeting every major web and mobile platform. This means that almost regardless of what tech stack you are using on the client-side, Ably can help broker the flow of data from your Kafka backend to chat users.

Additionally, Ably makes it easy to stream data generated by end-users back into your Kafka deployment at any scale, with the help of the Kafka rule.

The benefits of using Ably alongside Kafka for chat

We will now cover the main benefits you gain by using Ably together with Kafka to build production-ready chat functionality.

Dependability at scale

As we discussed earlier in the article, Kafka displays dependable characteristics that make it desirable as the streaming backbone of any chat system: low latency, data integrity guarantees, durability, high availability.

Ably matches, enhances, and extends Kafka’s capabilities beyond your private network, to public internet-facing devices. Our platform is underpinned by Four Pillars of Dependability, a mathematically modeled approach to system design that guarantees critical functionality at scale and provides unmatched quality of service guarantees. This enables you to create dependable digital experiences that will delight your end-users.

Let’s now see what each pillar entails:

Performance. Predictability of low latencies to provide certainty in uncertain operating conditions. This translates to <65 ms round trip latency for 99th percentile. Find out more about Ably’s performance.

Integrity. Message ordering, guaranteed delivery, and exactly-once semantics are ensured, from the moment a message is published to Ably, all the way to its delivery to consumers. Find out more about Ably’s integrity guarantees.

Reliability. Fault tolerance at regional and global levels so we can survive multiple failures without outages. 99.999999% message survivability for instance and data center failure. Find out more about Ably’s reliability.

Availability. Ably is meticulously designed to be elastic and highly available. We provide 50% capacity margin for instant surges and a 99.999% uptime SLA. Find out more about Ably’s high availability.

Flexible message routing & robust security

Since Kafka is designed for machine-to-machine communication within a secure network, it doesn’t provide adequate mechanisms to route and distribute events to client devices across firewalls.

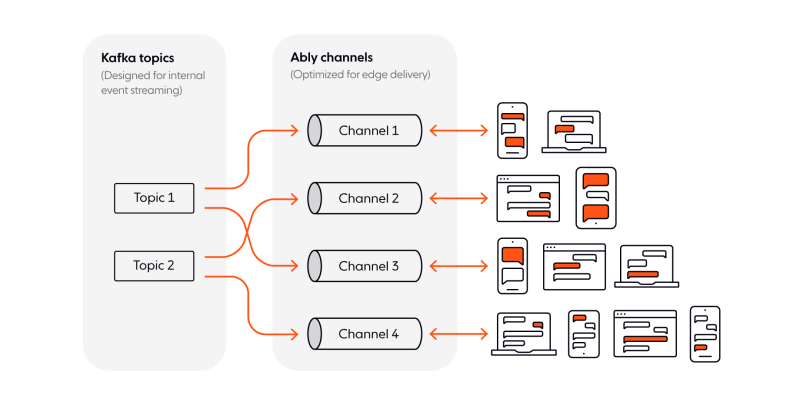

First of all, Kafka works best with a predictable and relatively limited number of topics, and having a 1:1 mapping between client devices and Kafka topics is not scalable. Therefore, you will have topics that store data pertaining to multiple users. However, a client device should only be allowed to receive Kafka data that is relevant for that user/device. But the client doesn’t know the exact topic it needs to receive information from, and Kafka doesn’t have a mechanism that can help with this.

Secondly, even if Kafka were designed to stream data to end-users, allowing client devices to connect directly to topics would raise serious security concerns. You don’t want your event streaming and stream processing pipeline to be exposed directly to public internet-facing clients.

By using Ably, you decouple your backend Kafka deployment from chat users, and gain the following benefits:

Flexible routing of messages from Kafka topics to Ably channels, which are optimized for delivering data across firewalls to end-user devices. This is done with the help of the Ably Kafka Connector.

Enhanced security, as client devices subscribe to relevant Ably channels instead of subscribing directly to Kafka topics.

Kafka topics and Ably channels
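The routing idea described above can be sketched as follows. This is a conceptual illustration, not the Ably Kafka Connector's configuration or API: the `chat:<room>` naming scheme and the `channelForRecord`, `routeRecords`, and `canSubscribe` helpers are all assumptions. It shows records from one shared topic being fanned out to per-room channels, with clients only allowed onto channels that are relevant to them.

```javascript
// Hypothetical naming scheme: one channel per chat room,
// derived from the record key.
function channelForRecord(record) {
  return `chat:${record.key}`;
}

// Group records from a shared topic by their destination channel.
function routeRecords(records) {
  const byChannel = new Map();
  for (const record of records) {
    const channel = channelForRecord(record);
    if (!byChannel.has(channel)) byChannel.set(channel, []);
    byChannel.get(channel).push(record.value);
  }
  return byChannel;
}

// A client may only subscribe to channels for rooms it belongs to.
function canSubscribe(user, channel) {
  return user.rooms.some((room) => `chat:${room}` === channel);
}

// One outbound topic holding data for several rooms.
const outboundTopic = [
  { key: "holiday-plans", value: "Where shall we go?" },
  { key: "support-1042", value: "How can I help?" },
  { key: "holiday-plans", value: "Somewhere warm!" },
];

const routed = routeRecords(outboundTopic);
const john = { name: "John Doe", rooms: ["holiday-plans"] };

console.log(routed.get("chat:holiday-plans").length); // 2
console.log(canSubscribe(john, "chat:holiday-plans")); // true
console.log(canSubscribe(john, "chat:support-1042"));  // false
```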

Additionally, Ably provides multiple security mechanisms suitable for data distribution across network boundaries, from network-level attack mitigation to individual message-level encryption:

SSL/TLS and 256-bit AES encryption

Flexible authentication (API keys and tokens), with fine-grained access control

Privilege-based access

DoS protection & rate limiting

Compliance with information security standards and laws, such as SOC 2 Type 2, HIPAA, and EU GDPR

Scalable pub/sub messaging optimized for end-users at the edge

As a backend pub/sub event streaming solution, Kafka works best with a low number of topics, and a limited and predictable number of producers and consumers, within a secure network. Kafka is not optimized to deliver data directly to an unknown, but potentially very high and rapidly changing number of end-user devices over the public internet, which is a volatile and unpredictable source of traffic.

In comparison, Ably’s equivalent of Kafka topics, called channels, are optimized for cross-network communication. Our platform is designed to be dynamically elastic and highly available. Ably can quickly scale horizontally to as many channels as needed, to support millions of concurrent subscribers - with no need to manually provision capacity.

Ably provides rich features that enable you to build seamless and dependable chat functionality. Among them:

Message ordering, delivery, and exactly-once guarantees. No chat message is ever lost, delivered multiple times, or out of order.

Connection recovery with stream resume. Even if brief disconnections are involved (for example, a chat user switches networks or goes through a tunnel), our client SDKs automatically re-establish connections, and the stream of messages resumes precisely where it left off.

Push notifications. Deliver native push notifications directly to iOS and Android users, even when a device isn’t online or connected to Ably. Additionally, you can broadcast push notifications to every active device subscribed to an Ably channel.

Message history. We retain two minutes of message history as a default, so if clients disconnect they can properly resume their conversations upon reconnection. You can also choose to persist messages for up to 72 hours.

Presence. Enables client devices to be aware of other clients entering, leaving, or updating their state on channels. This enables chat users to keep track of other people coming online and going offline.

Multi-protocol support. Ably’s native protocol is WebSocket-based, but we also support other protocols, such as Server-Sent Events and MQTT, so you can choose the right one for your use case.
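To give a feel for what "resuming precisely where it left off" involves, here's a simplified sketch of stream resume. It does not reproduce Ably's actual protocol or SDK behavior: the `ChannelBuffer` and `ResumableClient` classes and the per-message `serial` are hypothetical. The general idea is that the client remembers the last message it received and, on reconnection, asks only for what came after it.

```javascript
// Server-side stand-in: retains recent messages, each with a serial number.
class ChannelBuffer {
  constructor() {
    this.messages = [];
    this.nextSerial = 1;
  }

  publish(data) {
    const message = { serial: this.nextSerial++, data };
    this.messages.push(message);
    return message;
  }

  // Everything published after the given serial.
  after(serial) {
    return this.messages.filter((message) => message.serial > serial);
  }
}

// Client-side stand-in: tracks the last serial it has seen.
class ResumableClient {
  constructor(buffer) {
    this.buffer = buffer;
    this.lastSerial = 0;
    this.received = [];
  }

  // Called on first connect and on every reconnect.
  sync() {
    for (const message of this.buffer.after(this.lastSerial)) {
      this.received.push(message.data);
      this.lastSerial = message.serial;
    }
  }
}

const room = new ChannelBuffer();
const client = new ResumableClient(room);

room.publish("a");
client.sync(); // connected, receives "a"

// The client goes through a tunnel; two messages are published meanwhile.
room.publish("b");
room.publish("c");

client.sync(); // reconnects and resumes after "a"
console.log(client.received); // [ 'a', 'b', 'c' ]
```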

Reduced infrastructure and DevOps burden, faster time to market

Building and maintaining your own internet-facing messaging layer is difficult, time-consuming, and involves significant DevOps and engineering resources.

By using Ably as the public internet-facing messaging layer, you can focus on developing new features and improving your chat system instead of managing and scaling infrastructure. That’s because Ably is a fully managed cloud-native solution, helping you simplify engineering and code complexity, increase development velocity, and speed up time to market.

To learn more about how Ably helps reduce infrastructure, engineering, and DevOps-related costs for organizations spanning various industries, have a look at our case studies.

You might be especially interested in the Experity case study - although not a chat functionality provider, their use case demonstrates how well Kafka and Ably work together. Experity provides technology solutions for the healthcare industry. One of their core products is a BI dashboard that enables urgent care providers to drive efficiency and enhance patient care in realtime. The data behind Experity’s dashboard is drawn from multiple sources and processed in Kafka.

Experity decided to use Ably as their internet-facing messaging layer because our platform works seamlessly with Kafka to stream mission-critical and time-sensitive realtime data to end-user devices. Ably extends and enhances Kafka’s guarantees around speed, reliability, integrity, and performance. Furthermore, Ably frees Experity from managing complex realtime infrastructure designed for last-mile delivery. This saves Experity hundreds of hours of development time and enables the organization to channel its resources and focus on building its core offerings.

Here are some additional case studies, showing the benefits of using Ably for building scalable, dependable chat functionality:

HubSpot case study. HubSpot offers a fully-featured platform of marketing, sales, customer service, and CRM software. Ably provides a trustworthy realtime messaging layer for HubSpot, powering key features such as chat.

Homestay.com case study. Homestay.com is an online accommodation platform that’s used by more than 200,000 people. Among its features, Homestay.com provides group and one-to-one chat, powered by Ably.

Guild case study. Guild is a messaging app purpose-built for professional use. By building on Ably, Guild can provide tailor-made, instant communication features for different types of users.

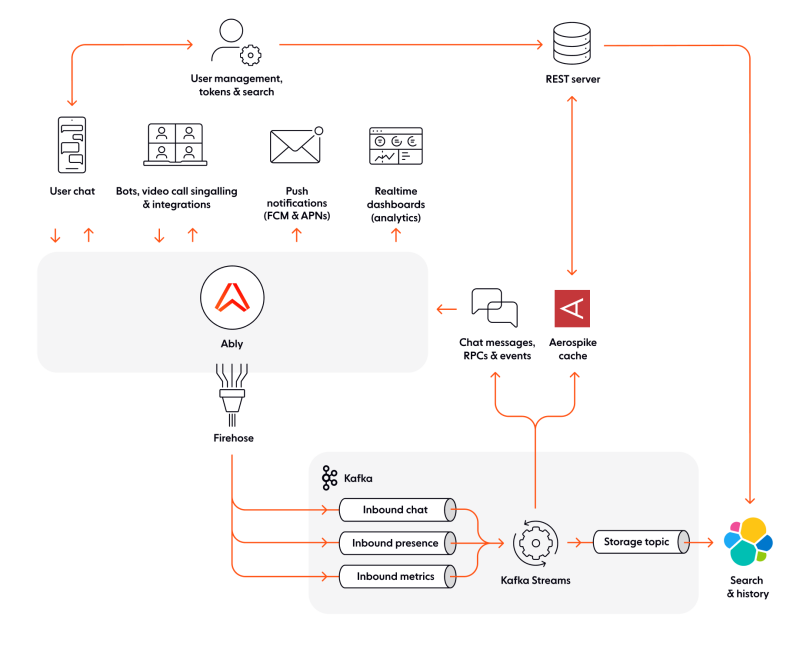

Chat architecture with Kafka and Ably

In this section we will look at two chat architecture examples: one with Kafka, and a second one with Kafka and Ably. Before we dive in, it’s worth mentioning that the two examples also contain components such as Spring, Aerospike, and Elasticsearch. We have included these three technologies because they are often used to deliver enterprise-grade software, and to make things more relatable. However, their use is indicative, not prescriptive.

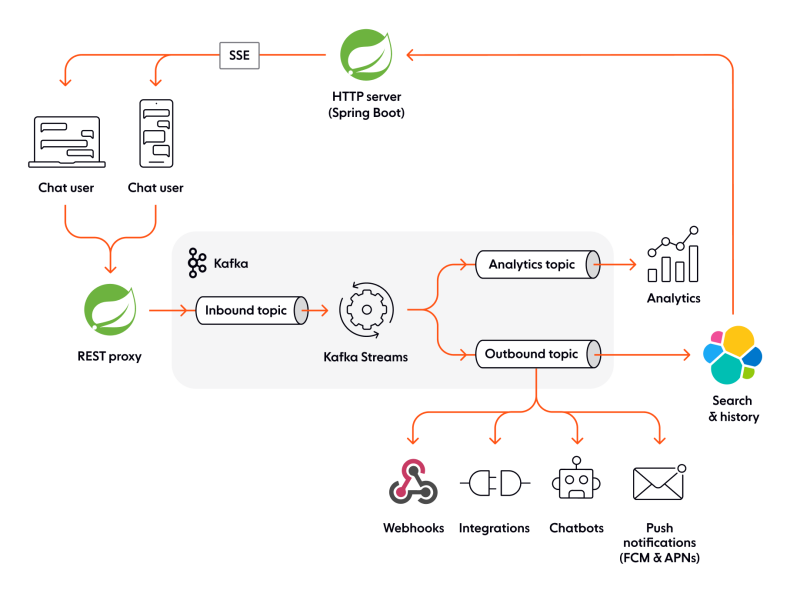

Now, let’s start by looking at the first chat architecture example, powered by Kafka.

Chat powered by Kafka

Whenever a user sends a chat message or loads chat history, a request is sent to a REST proxy. The proxy then writes the payload of the request to the inbound Kafka topic (note that this Kafka topic contains data pertaining to all users of the chat system). Kafka Streams workers are used to process all the data from the inbound topic, with the output being written to the analytics and outbound Kafka topics.

Data stored in the outbound topic is written to a search and message history database (Elasticsearch in this example) for persistent storage. A Spring Boot application consumes from the database and sends updates (chat messages and the chat history) to recipients over Server-Sent Events. Additionally, other systems that integrate with Kafka can consume events stored in the outbound Kafka topic. The data in this outbound topic can also be used for push notifications and chatbots.

Data in the analytics topic is used to power chat-related reports and analytics; in a business environment, where the chat is used for customer service, this could mean things like chat duration, average response time, or the total number of chat conversations.

The main disadvantage of this architecture is the use of HTTP components to intermediate the flow of data between the Kafka backend and chat users. First of all, there are no strong data integrity guarantees. For example, if a chat user quickly sends two chat messages one after another, we have two requests.

If the payload of the first request is significantly larger, there’s a risk it might be processed after the second request. In effect, this would lead to the two chat messages being delivered in the wrong order to the recipient(s). Furthermore, unstable network conditions could also lead to some requests never reaching the proxy server. If this happens, some chat messages may never be delivered to recipients. This, of course, is confusing and frustrating for any user. More than that, it’s completely unacceptable for certain use cases, such as a patient asking a doctor for medical advice, or sending through their health insurance details.
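The ordering problem can be reproduced in a few lines. The sketch below simulates two messages arriving in the wrong order and shows one common mitigation, which is not part of the architecture described above: the sender attaches a sequence number, and the receiver holds messages back until the gaps are filled. The `createReorderBuffer` helper and the `seq` field are assumptions made for this example.

```javascript
// Receiver-side buffer that releases messages strictly in sequence order.
function createReorderBuffer(onDeliver) {
  let expected = 1;
  const pending = new Map();
  return function receive(message) {
    pending.set(message.seq, message);
    // Deliver as many consecutive messages as are available.
    while (pending.has(expected)) {
      onDeliver(pending.get(expected));
      pending.delete(expected);
      expected += 1;
    }
  };
}

const shown = [];
const receive = createReorderBuffer((message) => shown.push(message.text));

// The larger first request is overtaken by the second one.
receive({ seq: 2, text: "second message" }); // held back, nothing shown yet
receive({ seq: 1, text: "first message (large payload)" });

console.log(shown);
// [ 'first message (large payload)', 'second message' ]
```

A buffer like this fixes ordering but not loss: if message 1 never reaches the server, message 2 waits indefinitely unless you also add acknowledgements and retries, which is part of why building this layer yourself gets complicated.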

Going beyond the fragile data integrity guarantees, the system is expensive and hard to scale. Even a basic 1:1 chat, where each participant sends and receives just one chat message, requires two connections per user: one to send a chat message (via the REST proxy), and another one to receive a message (over Server-Sent Events). That’s four connections in total. Now imagine how much computing power you need to serve thousands or even millions of concurrent chat users, and how hard it is to scale your system reliably, so you can provide a dependable experience for all of them.

Let’s now look at how you might architect your chat system with Kafka and Ably.

Chat powered by Kafka + Ably

Ably replaces the HTTP server and REST proxy used in the first example, simplifying your architecture, as there is only one public internet-facing layer for handling all incoming and outgoing chat messages. All data sent to Ably is streamed into Kafka for processing. Note that Ably can collect and publish different types of data into Kafka, from chat messages to presence events (such as someone joining a chat room). Similar to the first example, Kafka Streams workers are used to process data from the inbound Kafka topics.

Once processed, chat messages and presence events are sent to Ably channels for distribution to end-user devices. There is an Aerospike cache used to store user account data (such as user profiles, passwords, etc.). User search and message history are written by Kafka Streams workers to a storage topic, and then sent to Elasticsearch. End-users perform account-specific operations (such as changing their passwords), and retrieve search and message history through a REST server.

Compared to the first example, the architecture with Kafka and Ably scales much better. First of all, Ably uses WebSockets under the hood, which means that a user can send and receive chat messages over the same connection, rather than having to open multiple connections. Secondly, Ably is a fully managed solution that can autoscale to as many channels as needed to handle millions of concurrent subscribers. This enables you to keep things simple by removing all the complexity of managing and scaling infrastructure for realtime, event-driven communication over the public internet.

Another issue from the first architecture example is the weak data integrity guarantees (messages being delivered out of order, or potentially not at all). With Ably, you can rest assured that you won’t have to deal with this shortcoming. As previously mentioned in this article, Ably guarantees ordering, delivery, and exactly-once semantics from the moment an event is published to Ably, all the way to its delivery to consumers. Even if brief disconnections are involved (for example, a user switches from a mobile network to Wi-Fi), our client SDKs automatically re-establish connections and ensure stream continuity.

Going beyond live chat, Ably can be used to send push notifications to offline chat users. Our platform can also power live dashboards - useful for your internal teams if you are providing customer support over chat, and you want to have up-to-date analytics (e.g., average response time).

If you’re planning to add video support to your chat app, you’ll most likely have to use something like WebRTC, which allows for peer-to-peer communication. You’d still need servers so that clients can exchange metadata to coordinate communication through a process called signaling. The WebRTC API itself doesn’t offer a signaling mechanism, but you can use Ably to quickly implement dependable signaling mechanisms for WebRTC.
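Here's a rough sketch of what signaling over a pub/sub channel looks like. It uses an in-memory `SignalingChannel` class (hypothetical, standing in for a realtime channel) and placeholder strings instead of real session descriptions; in a browser, those would come from `RTCPeerConnection.createOffer()` and `createAnswer()`, and ICE candidates would be exchanged the same way.

```javascript
// In-memory stand-in for a pub/sub channel used for signaling.
class SignalingChannel {
  constructor() {
    this.subscribers = [];
  }

  subscribe(clientId, onMessage) {
    this.subscribers.push({ clientId, onMessage });
  }

  // Deliver the message to every subscriber except the sender.
  publish(senderId, message) {
    for (const subscriber of this.subscribers) {
      if (subscriber.clientId !== senderId) {
        subscriber.onMessage({ from: senderId, ...message });
      }
    }
  }
}

const signaling = new SignalingChannel();
const exchange = [];

// Callee: reply to any offer with an answer.
signaling.subscribe("callee", (message) => {
  exchange.push(`callee got ${message.type} from ${message.from}`);
  if (message.type === "offer") {
    signaling.publish("callee", { type: "answer", sdp: "<answer SDP placeholder>" });
  }
});

// Caller: record the answer when it arrives.
signaling.subscribe("caller", (message) => {
  exchange.push(`caller got ${message.type} from ${message.from}`);
});

signaling.publish("caller", { type: "offer", sdp: "<offer SDP placeholder>" });
console.log(exchange);
// [ 'callee got offer from caller', 'caller got answer from callee' ]
```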

What next?

We hope this article has helped you understand the benefits of combining Kafka and Ably when you want to engineer scalable, dependable chat applications for millions of concurrently-connected users.

Kafka is often used as the backend event streaming backbone of large-scale event-driven systems. Ably is a far-edge pub/sub messaging platform that seamlessly extends Kafka across firewalls. Ably matches and enhances Kafka’s capabilities, offering a scalable, dependable, and secure way to distribute and collect low-latency messages (events) to and from client devices over a global edge network, at consistently low latencies - without any need for you to manage or scale infrastructure.

Although the use case we’ve covered in this article focuses on chat, you can easily combine Kafka and Ably to power various live and collaborative digital experiences, such as realtime asset tracking and live transit updates, live dashboards, live scores, and interactive features like live polls, quizzes, and Q&As for virtual events. In any scenario where time-sensitive data needs to be processed and must flow between the data center and client devices at the network edge in (milli)seconds, Kafka and Ably can help.

Find out more about how Ably helps you effortlessly and reliably extend your Kafka pipeline to end-users at the edge, or get in touch and let’s chat about how we can help you maximize the value of your Kafka pipeline.

Ably and Kafka resources

Confluent Blog: Building a Dependable Real-Time Betting App with Confluent Cloud and Ably

How to stream Kafka messages to Internet-facing clients over WebSockets

Building a realtime ticket booking solution with Kafka, FastAPI, and Ably

Introducing the Fully Featured Scalable Chat App, by Ably’s DevRel Team
